AthenaHQ vs AirOps: A Decision Guide for AI Workflow & Visibility Strategy

AthenaHQ and AirOps are frequently compared because both sit within the AI-enabled content and growth stack.

Updated on Feb 12, 2026

TL;DR

- AthenaHQ focuses on AI visibility diagnostics and citation tracking.

- AirOps prioritizes workflow automation and scalable SEO execution.

- AthenaHQ fits insight-driven GEO teams.

- AirOps supports production-led growth teams.

- Teams seeking unified AI search governance often evaluate Dageno.

AthenaHQ vs AirOps: Which Is Better?

If you’re researching AthenaHQ vs AirOps, you’re already in the decision stage. The question is no longer whether AI search matters; it’s which infrastructure best supports your team’s shift toward AI Overviews, answer engines, and generative visibility.

Both AthenaHQ and AirOps are frequently cited in discussions around AI search tooling. AthenaHQ is commonly positioned as a visibility intelligence platform for generative engines. AirOps is often evaluated as an automation and workflow system for SEO and content teams.

Searches for “AthenaHQ vs AirOps” typically come from teams that:

- Have moved beyond traditional keyword tracking

- Need measurable AI answer presence

- Are scaling production pipelines

- Are reconsidering tooling as AI search reshapes traffic attribution

This guide is not a list of complaints about either tool. Instead, it clarifies their structural differences, because the two platforms solve problems at different layers of the stack.

How to Compare AthenaHQ vs AirOps Effectively

The comparison only makes sense if framed correctly.

These tools are not feature mirrors. They operate at different layers:

1. Visibility Intelligence vs Operational Infrastructure

AthenaHQ answers:

“Where do we appear in AI answers, and why?”

AirOps answers:

“How do we operationalize content production efficiently?”

That difference alone shapes most selection outcomes.

2. Diagnostic Depth vs Execution Breadth

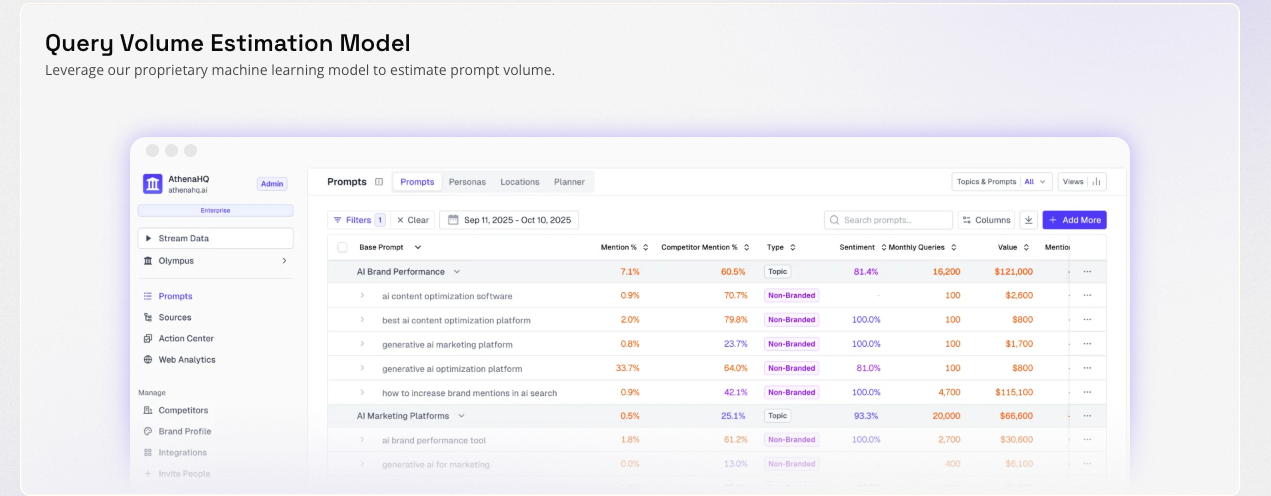

AthenaHQ provides:

- Generative engine citation tracking

- Competitive answer benchmarking

- Prompt-level visibility diagnostics

AirOps provides:

- No-code workflow builders

- Batch publishing systems

- SEO automation pipelines

If your constraint is strategic insight, AthenaHQ may be more aligned.

If your constraint is throughput, AirOps may be more relevant.

3. Insight-to-Action Gap

One recurring theme in AI search tooling:

Monitoring does not equal execution.

Execution does not equal governance.

Understanding which gap you are solving prevents tool mismatch.

AthenaHQ vs AirOps: Side-by-Side Comparison

| Dimension | AthenaHQ | AirOps |
|---|---|---|
| Core Orientation | AI search visibility tracking | Workflow automation & execution |
| AI Citation Monitoring | Deep multi-engine tracking | Limited native coverage |
| Prompt Discovery | Yes | Limited, workflow-linked |
| Content Automation | Minimal | Strong automation pipelines |
| Competitive Benchmarking | Citation-focused | SEO production metrics |
| Workflow Depth | Moderate | Advanced |
| Primary Users | Strategy & analytics teams | Growth & content ops teams |
| GEO Readiness | Visibility-centric | Execution-centric |

AthenaHQ: Where It Delivers

AthenaHQ is strongest when AI answer visibility itself is the KPI.

Key characteristics:

- Multi-engine generative citation tracking

- Prompt-level visibility insights

- Competitive presence comparison

- Trend analysis over time

This is particularly useful when:

- Reporting AI visibility to executives

- Diagnosing citation loss

- Benchmarking brand presence in answer engines

Its strength lies in analytics clarity, not workflow orchestration.

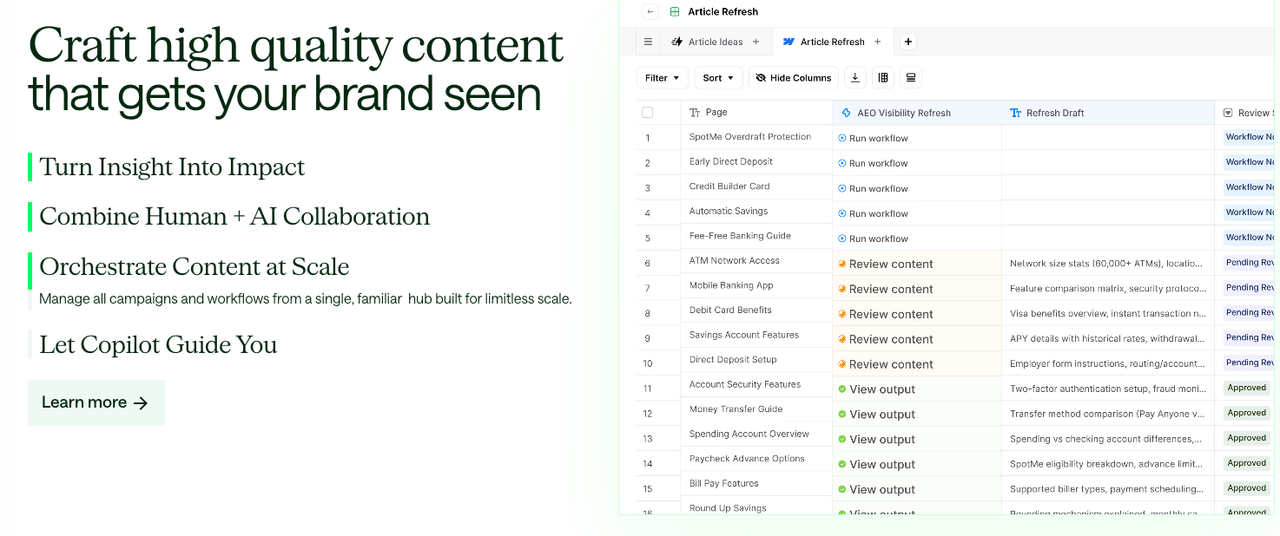

AirOps: Where It Delivers

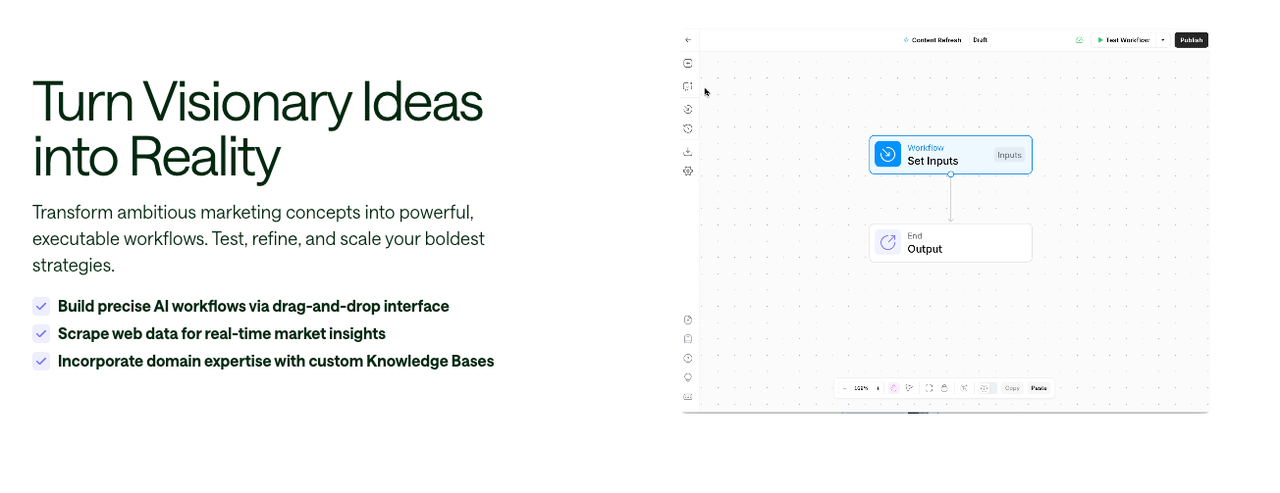

AirOps focuses on building operational systems.

Key capabilities include:

- No-code workflow builder

- Multi-step content pipelines

- CMS integrations

- Scalable programmatic publishing

AirOps becomes valuable when:

- Manual processes create bottlenecks

- Content production must scale

- SEO processes require repeatability

Its strength lies in execution infrastructure rather than diagnostic intelligence.

Real User Feedback: What Customers Say About AthenaHQ and AirOps

Beyond feature comparisons, user reviews often reveal how each platform performs under real operational conditions. While both AthenaHQ and AirOps maintain strong overall ratings across review platforms, the themes in user feedback highlight clear differences in perceived value.

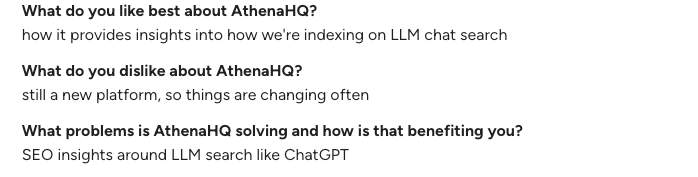

AthenaHQ — User Sentiment Overview

AthenaHQ users frequently emphasize its role as a visibility intelligence platform rather than a workflow tool.

What Users Appreciate

Clear AI Visibility Metrics

Users consistently note that AthenaHQ makes AI answer tracking tangible. The ability to see brand mentions across generative engines is often described as “clarifying” for leadership reporting and GEO strategy alignment.

Competitive Benchmarking Depth

Several reviewers mention that competitive citation tracking helps them spot visibility gaps that traditional SEO dashboards never surfaced.

Intuitive Reporting Interface

Many users describe the interface as straightforward, particularly for analytics teams. Dashboards are often cited as executive-ready without heavy customization.

Time Savings in Monitoring

Instead of manually testing prompts across different LLMs, teams report consolidating tracking into one monitoring layer.

Where Users See Limitations

Recommendations Require Human Interpretation

Some users indicate that while AthenaHQ surfaces insights, the “what next” still depends on internal strategy and execution capacity.

Rapid Product Iteration

Because the platform evolves quickly, some users note that features may change frequently, requiring adaptation.

Pricing Considerations

A few reviews suggest that smaller teams need to evaluate ROI carefully if their primary need is light monitoring rather than deep GEO analytics.

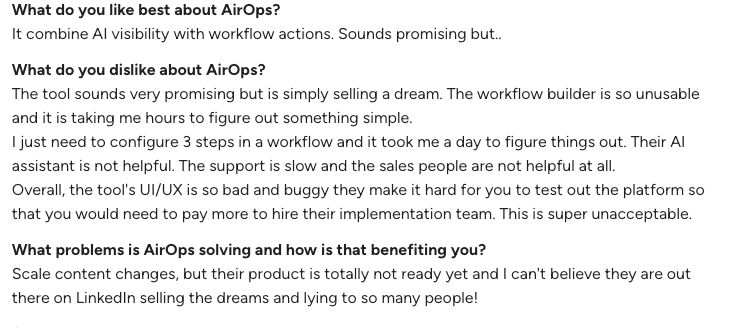

AirOps — User Sentiment Overview

AirOps feedback reflects its identity as an execution and automation platform.

What Users Appreciate

Workflow Automation and Scale

Users often highlight the ability to build repeatable content pipelines as the primary value driver. Batch editing and automation features are frequently mentioned.

CMS Integrations

Direct integrations with publishing systems are viewed as practical for reducing operational friction.

Operational Efficiency Gains

Several reviews state that AirOps significantly reduces manual content refresh cycles, especially for teams managing hundreds or thousands of pages.

Strong Fit for Content Teams

Feedback suggests that content operators, rather than analytics strategists, benefit most from the platform’s architecture.

Where Users See Limitations

Learning Curve

While powerful, AirOps requires workflow planning and internal process clarity. Some teams report an onboarding period before realizing full value.

AI Visibility Depth

Users seeking deep generative engine citation tracking often note that AirOps is not primarily built for that diagnostic layer.

Human Review Still Necessary

Automated content outputs typically require editorial review, especially in regulated or technical industries.

Interpreting User Feedback in Context

User reviews reinforce the structural distinction discussed earlier:

- AthenaHQ is perceived as a diagnostic and visibility intelligence tool.

- AirOps is perceived as an execution and workflow acceleration platform.

The difference is not about quality — both tools are positively reviewed overall — but about which constraint they remove inside an organization.

For teams evaluating AthenaHQ vs AirOps, user feedback consistently aligns with the broader architectural split:

Visibility clarity → AthenaHQ

Operational scalability → AirOps

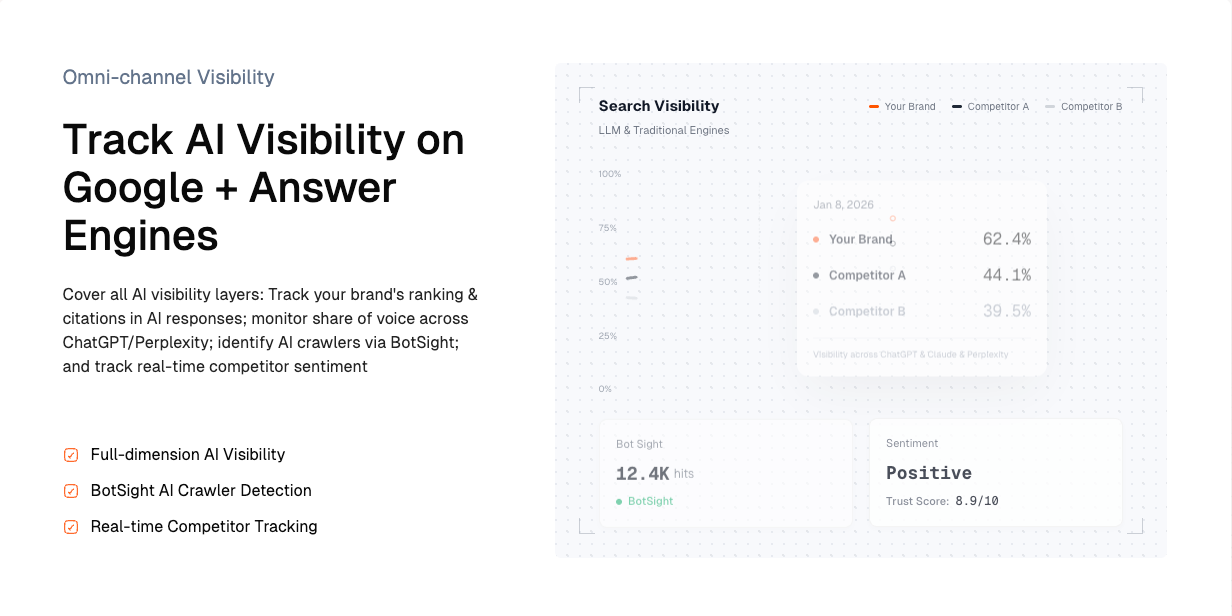

When Dageno Makes More Sense Than Standalone Alternatives

Dageno operates differently. It is positioned not as a single-function tool, but as a system designed to:

Track, analyze, and elevate your brand across traditional search engines as well as AI answer and generative engines (AEO and GEO).

Rather than separating monitoring and execution, it integrates both under one governance layer.

1. Track AI Visibility on Google + Answer Engines

Dageno includes:

Full-dimension AI Visibility

Track brand presence across:

- Google AI Overviews

- LLM-generated answers

- Traditional SERPs

This provides unified visibility reporting rather than siloed AI-only metrics.

BotSight AI Crawler Detection

Identify AI crawler behavior to understand:

- Which engines access your content

- How frequently AI systems crawl your pages

This bridges technical SEO signals with AI exposure.
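To make AI crawler detection concrete, here is a minimal sketch of the general technique, not BotSight's implementation: scan web-server access-log lines for the user-agent tokens that AI crawlers announce, then count hits per crawler and per page. The token list is an illustrative, non-exhaustive assumption, the log is assumed to be in the Apache/Nginx "combined" format, and the sample lines are made up. User-agent strings can be spoofed, so a real system would also need to verify requests (for example against published IP ranges); this sketch does not.

```python
import re
from collections import Counter

# Illustrative, non-exhaustive map of User-Agent substrings to crawler labels.
# Assumption: these tokens appear verbatim in each crawler's User-Agent header.
AI_CRAWLER_TOKENS = {
    "GPTBot": "OpenAI GPTBot",
    "OAI-SearchBot": "OpenAI OAI-SearchBot",
    "ChatGPT-User": "OpenAI ChatGPT-User",
    "ClaudeBot": "Anthropic ClaudeBot",
    "PerplexityBot": "PerplexityBot",
    "CCBot": "Common Crawl CCBot",
}

# Apache/Nginx "combined" format:
# ip ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
COMBINED_LOG = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical log lines used only to demonstrate the parser.
SAMPLE_LOG = [
    '203.0.113.5 - - [12/Feb/2026:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [12/Feb/2026:10:01:00 +0000] "GET /pricing HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"',
    '192.0.2.9 - - [12/Feb/2026:10:02:00 +0000] "GET /blog/geo-guide HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; ClaudeBot/1.0; +claudebot@anthropic.com)"',
    '203.0.113.5 - - [12/Feb/2026:11:00:00 +0000] "GET /pricing HTTP/1.1" 304 0 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
    "not a valid log line",
]


def classify_agent(agent):
    """Return a crawler label if the user agent contains a known AI token, else None."""
    for token, label in AI_CRAWLER_TOKENS.items():
        if token in agent:
            return label
    return None


def summarize_ai_crawls(lines):
    """Count AI-crawler hits per crawler and per (crawler, path) pair."""
    by_crawler = Counter()
    by_page = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        if not match:
            continue  # skip malformed or non-combined-format lines
        crawler = classify_agent(match.group("agent"))
        if crawler is None:
            continue  # ordinary browser or a bot not in the token list
        by_crawler[crawler] += 1
        by_page[(crawler, match.group("path"))] += 1
    return by_crawler, by_page


if __name__ == "__main__":
    crawlers, pages = summarize_ai_crawls(SAMPLE_LOG)
    for label, hits in crawlers.most_common():
        print(f"{label}: {hits} hits")
```

Counting by `(crawler, path)` is what answers "which pages AI systems crawl and how often"; adding the `time` group to the key would turn the same counts into a crawl-frequency trend.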

Real-time Competitor Tracking

Monitor shifts in:

- Citation dominance

- Answer frequency

- Competitive AI presence

This moves beyond static monthly reporting into continuous intelligence.
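One simple way to define metrics like answer frequency and citation dominance is as ratios over a sample of captured AI answers. The sketch below is a hypothetical illustration, not how Dageno or AthenaHQ compute their scores: `AnswerSample`, the engine names, and the brands are all made up, and it assumes brand citations have already been extracted from each answer. Answer frequency is the share of answers that cite a brand; citation share is each brand's fraction of all brand citations, counting a brand at most once per answer.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AnswerSample:
    """One captured AI answer for a tracked prompt (hypothetical structure)."""
    prompt: str
    engine: str
    cited_brands: list[str]


# Hypothetical sample: two prompts, each checked on two engines.
SAMPLES = [
    AnswerSample("best crm for startups", "engine_a", ["BrandA", "BrandB"]),
    AnswerSample("best crm for startups", "engine_b", ["BrandB"]),
    AnswerSample("crm pricing comparison", "engine_a", ["BrandA", "BrandC", "BrandA"]),
    AnswerSample("crm pricing comparison", "engine_b", []),
]


def answer_frequency(samples, brand, engine=None):
    """Share of answers (optionally for one engine) that cite `brand` at least once."""
    pool = [s for s in samples if engine is None or s.engine == engine]
    if not pool:
        return 0.0
    return sum(1 for s in pool if brand in s.cited_brands) / len(pool)


def citation_share(samples):
    """Each brand's fraction of all citations, counting a brand once per answer."""
    counts = Counter(brand for s in samples for brand in set(s.cited_brands))
    total = sum(counts.values())
    return {brand: n / total for brand, n in counts.items()} if total else {}


if __name__ == "__main__":
    print(answer_frequency(SAMPLES, "BrandA"))
    print(citation_share(SAMPLES))
```

Re-running these ratios on each new batch of captured answers, rather than once a month, is what turns them into the continuous competitor signal described above.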

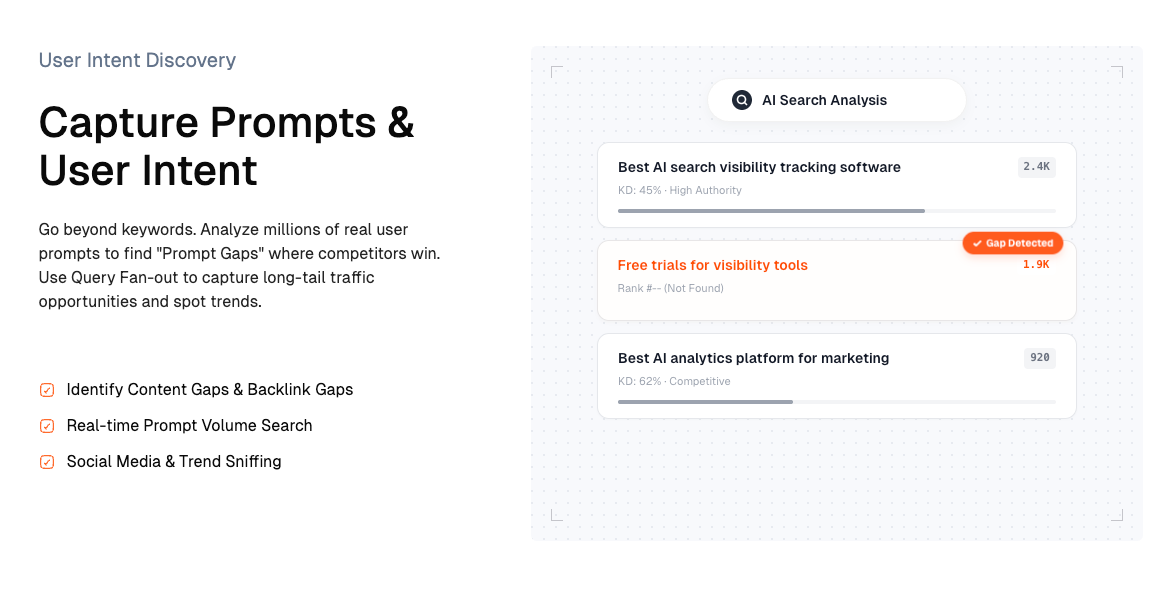

2. Capture Prompts & User Intent

Traditional SEO tools revolve around keywords.

AI search revolves around prompts.

Dageno includes:

Identify Content Gaps & Backlink Gaps

Reveal where competitors dominate high-impact prompts and where their backlink authority outpaces yours.

Real-time Prompt Volume Search

Analyze prompt demand instead of relying only on keyword data.

Social Media & Trend Sniffing

Surface discourse trends that influence generative answers before they scale.

This creates alignment between intent discovery and AI answer exposure.

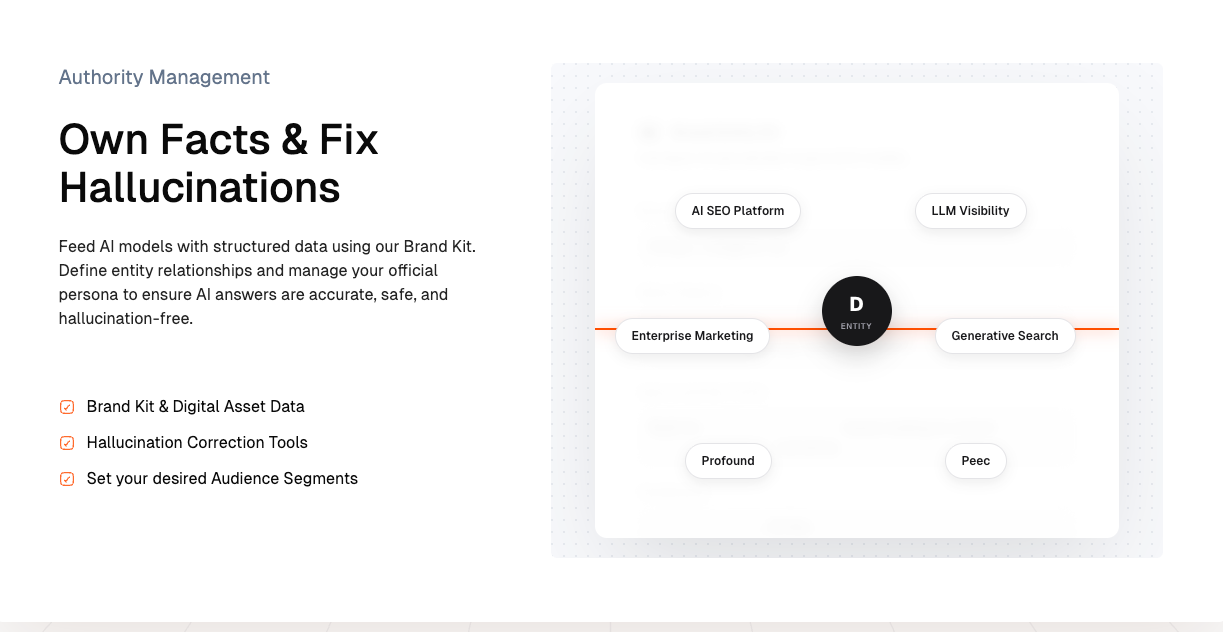

3. Own Facts & Fix Hallucinations

AI visibility is not only about inclusion — it is about accuracy.

Dageno includes:

Brand Kit & Digital Asset Data

Centralize authoritative brand information to influence how your brand is represented in structured data and AI answers.

Hallucination Correction Tools

Enable structured correction workflows when AI outputs inaccurate brand information.

Audience Segment Control

Influence how narratives appear across different user segments.

This governance dimension is typically absent in standalone monitoring or workflow tools.
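A widely used, standards-based way to publish authoritative brand facts in machine-readable form is schema.org `Organization` markup embedded as JSON-LD. The sketch below shows that general technique only; it is an assumption that a brand kit would feed something similar, not a description of how Dageno's Brand Kit works. `BRAND_KIT` and every value in it are placeholders.

```python
import json

# Hypothetical brand-kit record; every value here is a placeholder.
BRAND_KIT = {
    "name": "Example Co",
    "url": "https://www.example.com",
    "logo": "https://www.example.com/logo.png",
    "description": "Example Co makes example products.",
    "foundingDate": "2015",
    "sameAs": [
        "https://www.linkedin.com/company/example",
        "https://x.com/example",
    ],
}

# schema.org Organization properties this sketch copies over when present.
ORG_FIELDS = ("name", "url", "logo", "description", "foundingDate", "sameAs")


def organization_jsonld(kit):
    """Build a schema.org Organization object from a brand-kit dict, skipping empty fields."""
    data = {"@context": "https://schema.org", "@type": "Organization"}
    for key in ORG_FIELDS:
        value = kit.get(key)
        if value:
            data[key] = value
    return data


def jsonld_script_tag(kit):
    """Wrap the JSON-LD in the <script> tag a page would embed in its HTML."""
    payload = json.dumps(organization_jsonld(kit), indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(jsonld_script_tag(BRAND_KIT))
```

Keeping one source record and generating the markup from it means a corrected fact (say, a founding date) propagates to every page at once, which is the point of centralizing brand data.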

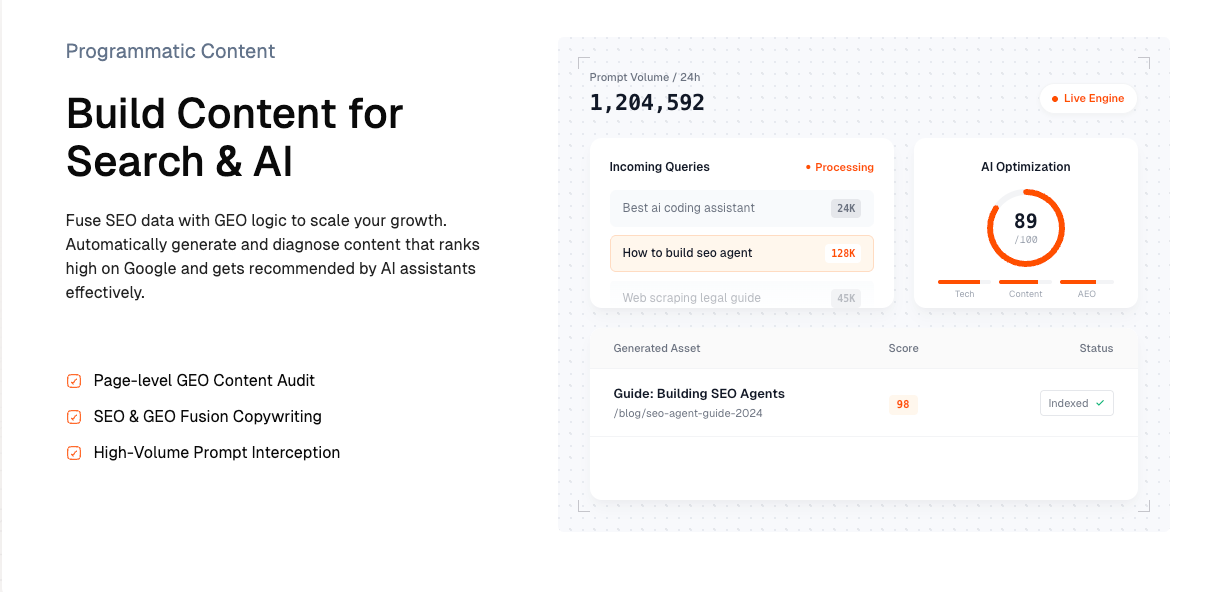

4. Build Content for Search & AI

Rather than separating SEO from GEO, Dageno merges them.

Page-level GEO Content Audit

Assess whether pages are structured for generative inclusion.

SEO & GEO Fusion Copywriting

Align ranking optimization with AI citation probability.

High-Volume Prompt Interception

Target prompts with high answer frequency.

This reduces the lag between insight and content execution.

Final Verdict: Which Alternative Should You Choose?

Choose AthenaHQ if:

- Your priority is AI answer visibility diagnostics

- You need executive-ready generative reporting

- Competitive citation tracking is your primary KPI

Choose AirOps if:

- You are scaling content operations

- Workflow automation is your constraint

- Execution speed matters more than deep AI analytics

If your goal is not just switching tools but building a sustainable GEO and AI search strategy, Dageno is often the more practical choice.

It integrates the following in one system:

- AI visibility tracking

- Prompt intelligence

- Hallucination governance

- Content alignment

- Automated execution

The right decision depends on where your constraint lies:

- Measurement bottleneck → AthenaHQ

- Execution bottleneck → AirOps

- Governance bottleneck → Dageno

FAQ

1. Is AthenaHQ better than AirOps for AI search?

AthenaHQ is stronger for AI citation visibility and generative monitoring. AirOps is stronger for workflow automation and scalable execution.

2. Can AirOps replace AthenaHQ?

Not directly. AirOps focuses on production systems, not deep generative visibility tracking.

3. Do teams use multiple tools together?

Yes. Some teams combine visibility monitoring and workflow automation, though this increases stack complexity.

4. When should you consider a system-level GEO platform?

When monitoring, correction, prompt intelligence, and execution need to operate inside one strategic framework rather than across fragmented tools.

References

- Scalenut – AthenaHQ vs AirOps

- AirOps Official Comparison Page

- G2 – AthenaHQ Reviews

- G2 – AirOps Reviews

- Rankability – AI Search Visibility Tools Review

Summary

AthenaHQ and AirOps represent two different layers of the AI search stack. AthenaHQ focuses on visibility intelligence—tracking where and how your brand appears across generative engines and AI answers. AirOps centers on workflow automation and scalable content execution, helping teams operationalize SEO and publishing systems efficiently.

The choice between AthenaHQ vs AirOps depends on your primary constraint. If you lack clarity on AI citation presence and competitive visibility, AthenaHQ is the logical starting point. If production scale and process automation are your bottlenecks, AirOps addresses that need. Teams seeking tighter alignment between monitoring and execution may also evaluate system-level platforms like Dageno to consolidate governance, visibility, and action within a unified AI search strategy.

About the Author

Updated by

Richard

Richard is a technical SEO and AI specialist with a strong foundation in computer science and data analytics. Over the past 3 years, he has worked on GEO, AI-driven search strategies, and LLM applications, developing proprietary GEO methods that turn complex data and generative AI signals into actionable insights. His work has helped brands significantly improve digital visibility and performance across AI-powered search and discovery platforms.